What:

It’s a method of supervised ML for classification. It works on top of Bayes Theorem with the following idea:

Given past observations of this thing, how likely is it to belong to a certain category.

How:

- We collect labelled data (e.g. spam / not spam emails).

- We count how often certain words appear in each.

- We use that and then calculate the probability that certain words appear in either email (using Bayes Theorem).

- Now, we can make predictions for new emails using a rearranged version of the formula.

Classification (Semi) Mathematically?

Suppose we classify emails as spam or not spam based on word presence. Given an email containing words “cheap”, “Viagra”, and “discount”, we compute:

Using Bayes’ Theorem and the independence assumption, we estimate:

If this probability is higher than the probability of being “not spam”, we classify it as spam.

Training Very Mathematically:

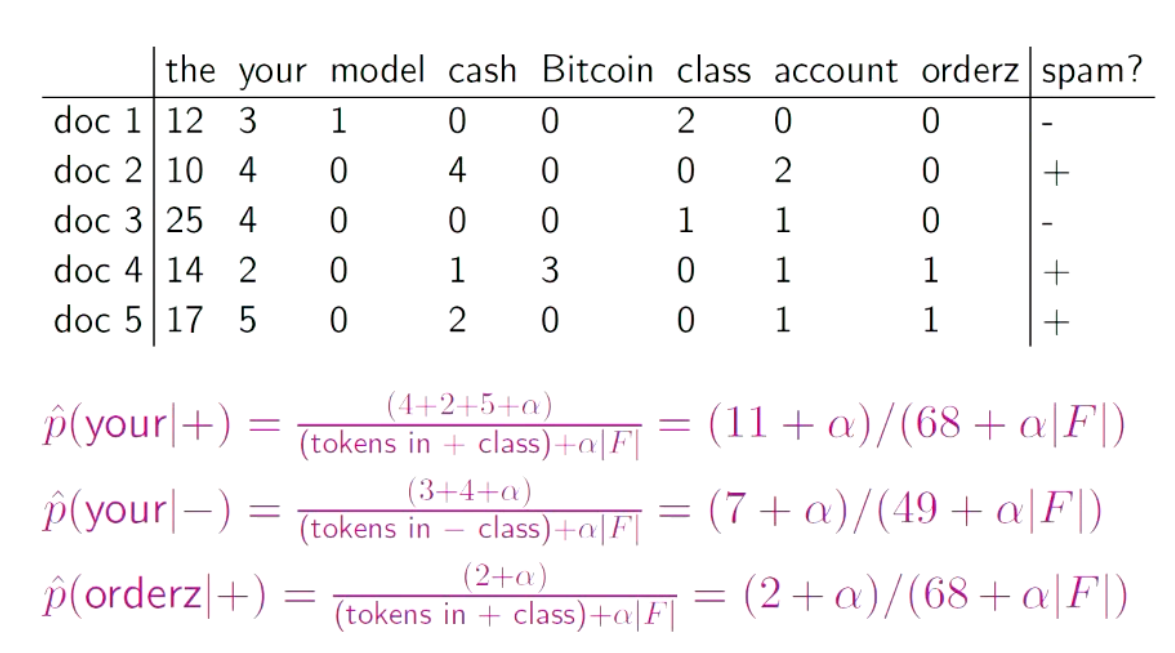

- normally estimated with simple -smoothing (Laplace smoothing):$$

\hat{p}(f_i|y) = \frac{\text{count}(f_i, y) + \alpha}{\sum_{f \in F} (\text{count}(f, y) + \alpha)} - $\text{count}(f_i, y)$ = the number of times $f_i$ occurs in class $y$ - $F$ = the set of possible features - $\alpha$: the smoothing parameter, optimised on held-out data

Example:

Wdym Naïve?

Naive Bayes assumes that the features are independent from each other. But in reality, if an email has “win”, it will likely also have “prizes”. BUT, those words individual words are enough to be highly indicative of categories, even when taking on their own (albeit “win prizes” is a dead giveaway, “win” and “prizes” is still indicative enough).

✅ Naive Assumption Works For:

- Text classification (surprisingly)

- Medical Diagnoses

- Very fast to train and test + requires less training data.

❌ Naive Assumption Fails For:

- Wherever strong feature interdependence exists

- Image classification

- Because every pixel heavily relies on nearby ones, where NB assumes every pixel is independent

- When a feature appears in the testing data that has never appeared in the training data, then the probability is likely zero.

- We solve this by adding a constant (~) to the formula, thus we almost count as if every word has been seen.

- The Naive assumption means that