It’s annoying cos it’s actually so deceptively simple.

What:

In a Neural Network, you need a loss function that quantifies just how terribly your model did. A really common choice for classification problems is Cross-Entropy Loss (basically synonymous with Negative Log Likelihood).

How?

- You take an example from every class $i$. You calculate the predicted confidence of it being that class ($\hat{y}_i$).

- You plug that into: $y_i \log(\hat{y}_i)$ (where $y_i$ is the true label).

- You sum all of the losses together (and then invert the sign).

- That’s your total Cross Entropy Loss.
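The steps above can be sketched in a few lines of plain Python. This is a minimal sketch, not a library implementation: the function name `cross_entropy`, the `eps` clamp, and the example numbers are all made up for illustration.

```python
import math

def cross_entropy(y_true, y_pred, eps=1e-12):
    """Sum y_i * log(y_hat_i) over all terms, then flip the sign."""
    total = 0.0
    for y, y_hat in zip(y_true, y_pred):
        # Clamp so we never take log(0); eps is an illustrative choice.
        y_hat = min(max(y_hat, eps), 1.0)
        total += y * math.log(y_hat)
    return -total

# Hypothetical numbers: three examples, each with true label 1,
# paired with the model's confidence in the correct class.
y_true = [1, 1, 1]
y_pred = [0.9, 0.6, 0.2]
loss = cross_entropy(y_true, y_pred)
print(loss)
```

Notice that the unconfident prediction (0.2) contributes far more to the total than the confident one (0.9).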

Mathematically:

$$L_{CE} = -\sum_{i=1}^{N} y_i \log(\hat{y}_i)$$

where:

- $\hat{y}_i$ is the predicted probability of class $i$ (often from a softmax function in classification problems).

- $y_i$ is the actual (true) label, typically represented as a one-hot encoded value (i.e., $1$ if the sample belongs to class $i$, otherwise $0$).

- $N$ is the total number of samples in the dataset.

- The summation iterates over all samples in the dataset.

- The logarithm ensures that incorrect predictions result in higher penalty values.

- The negative sign ensures the loss is minimised when predicted probabilities align closely with the true labels.
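Since the predicted probabilities often come from a softmax, here is a rough sketch of going from raw scores to a loss value. The `softmax` helper and the `logits` values are hypothetical, written by hand rather than taken from any particular library.

```python
import math

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]   # hypothetical raw scores for 3 classes
y = [1, 0, 0]              # one-hot: the sample belongs to class 0
y_hat = softmax(logits)    # probabilities that sum to 1

# Cross-entropy: sum of y_i * log(y_hat_i), with the sign flipped.
loss = -sum(y_i * math.log(p_i) for y_i, p_i in zip(y, y_hat))
print(y_hat, loss)
```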

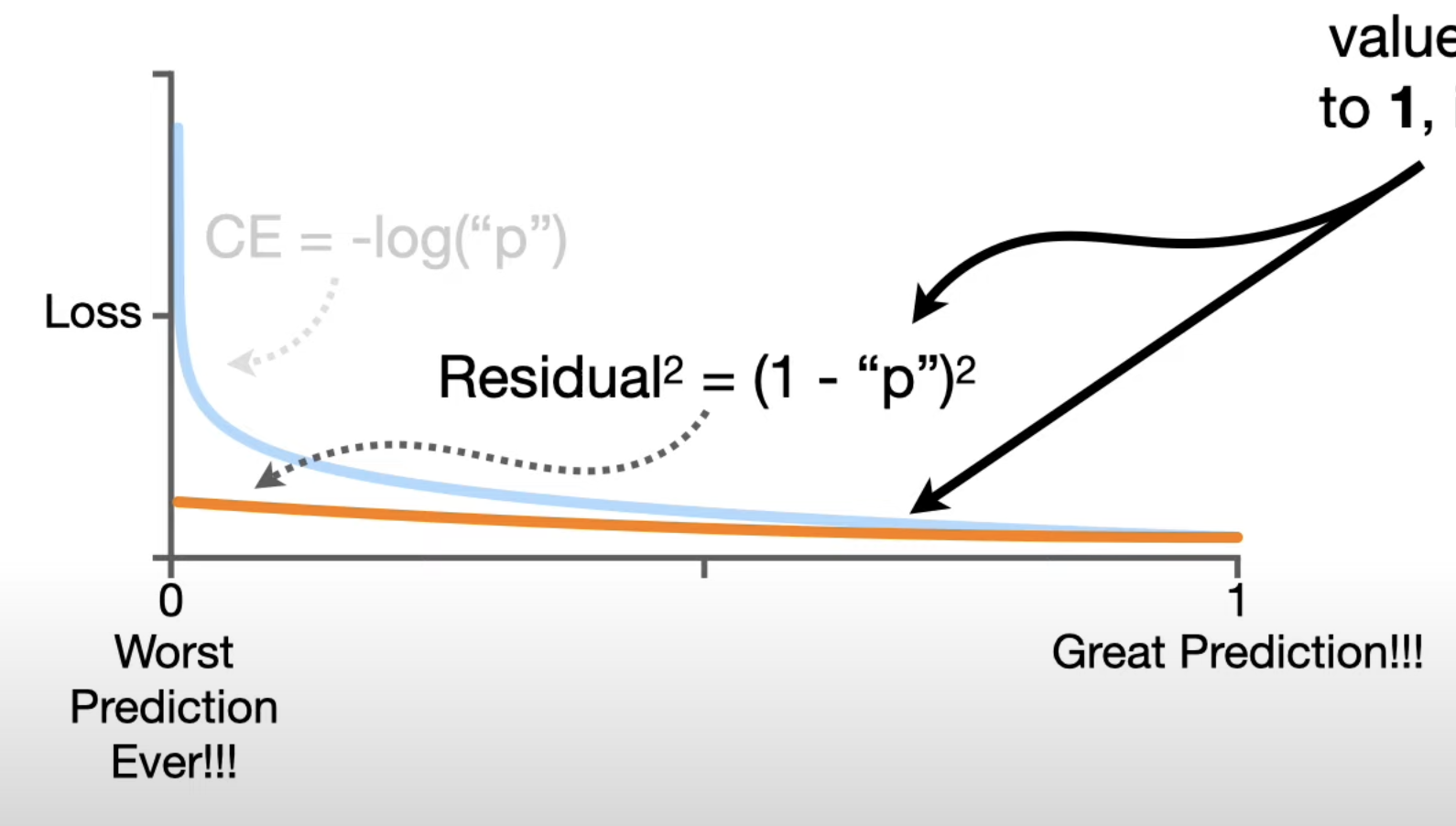

Why Cross Entropy and Not Sum of Squared Errors?

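If we have an absolutely awful prediction, the model gets reallllyyyyy punished for it under CE: as the predicted probability of the true class heads towards 0, $-\log(\hat{y})$ blows up towards infinity. Not necessarily true for SSE, where the squared error on a single probability can never exceed 1.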
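To make the difference concrete, here is a small sketch comparing the two penalties as the prediction for the true class gets worse. The helper names `ce_term` and `se_term` and the probabilities are illustrative assumptions, and the true label is taken to be 1.

```python
import math

def ce_term(p):
    """Cross-entropy penalty when the true class gets probability p."""
    return -math.log(p)

def se_term(p):
    """Squared error against a true label of 1."""
    return (1 - p) ** 2

# Walk from a good prediction to an absolutely awful one.
for p in [0.9, 0.5, 0.1, 0.01, 0.0001]:
    print(f"p={p:<7} CE={ce_term(p):7.3f}  SE={se_term(p):.3f}")
```

The squared error flattens out just below 1, while the cross-entropy term keeps growing the more confidently wrong the model is.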

Cross-Entropy with One-Hot Encoding:

Whenever you’re using one-hot encodings, the summation symbol disappears. Your new loss for class $i$ has just become:

$$L_i = -\log(\hat{y}_i)$$

Wait! Where'd the summation go?

When you use one-hot encoding for your classes in classification, the summation symbol disappears. This is because, for each class’s prediction, the other classes have a $y$ of 0. Then, their corresponding $y \log(\hat{y})$ term just turns to 0. Neat, isn’t it?
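A quick way to convince yourself is to compute it both ways. This is just a sketch with made-up probabilities; `full_sum` and `shortcut` are illustrative names.

```python
import math

y = [0, 0, 1, 0]                # one-hot: the true class is index 2
y_hat = [0.1, 0.2, 0.6, 0.1]    # hypothetical predicted probabilities

# The full summation: every term with y = 0 contributes nothing.
full_sum = -sum(t * math.log(p) for t, p in zip(y, y_hat))

# The shortcut: just the log of the true class's probability.
shortcut = -math.log(y_hat[y.index(1)])

print(full_sum, shortcut)
```

Both values come out the same, because only the true class's term survives the multiplication by $y$.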

Binary Cross Entropy:

The original formula is the general case. But what if we just have two classes (e.g. rain / not rain)?

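- General Formula: $L_{CE} = -\sum_{i=1}^{N} y_i \log(\hat{y}_i)$

- Expanding out with 2 classes: $L = -\left[ y_1 \log(\hat{y}_1) + y_2 \log(\hat{y}_2) \right]$

- Subbing in $1 - y_1$ for $y_2$ and $1 - \hat{y}_1$ for $\hat{y}_2$: $L = -\left[ y_1 \log(\hat{y}_1) + (1 - y_1) \log(1 - \hat{y}_1) \right]$

- Final BCE formula: $L_{BCE} = -\left[ y \log(\hat{y}) + (1 - y) \log(1 - \hat{y}) \right]$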

- Note: When you’re actually filling this out in practice, you’re only ever filling out one side of the formula. Because the true label $y$ is either 0 or 1, the other side gets multiplied by 0 and drops out.
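Here is a minimal sketch of the binary case for a single prediction, assuming the true label is 0 or 1 and the prediction is the probability of the positive class (rain). The function name `binary_cross_entropy` and the `eps` clamp are illustrative choices, not a library API.

```python
import math

def binary_cross_entropy(y, y_hat, eps=1e-12):
    # Clamp away from 0 and 1 so neither log blows up.
    y_hat = min(max(y_hat, eps), 1 - eps)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

# The model says 80% chance of rain.
rain = binary_cross_entropy(1, 0.8)      # it rained: only -log(0.8) survives
no_rain = binary_cross_entropy(0, 0.8)   # it didn't: only -log(1 - 0.8) survives
print(rain, no_rain)
```

In each call only one of the two terms is non-zero, which is the "only ever filling out one side" behaviour from the note.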