An idea

When referring to any sort of AI model, we must first ask ourselves: ‘What are models?’

They’re unique abstractions of reality, which we can form any way we choose.

High-Level Overview:

As in most of ML, we do not want to create hard If-This-Then-That rules. Instead, we want to create a model with a bunch of tuneable knobs and dials (i.e. weights). We then feed it a bunch of examples and (somehow) these weights get turned in just the right way. We can then feed a never-before-seen example into the model, and it will return a reasonable output. (Simple Linear Regression is a simple example; the knobs are the y-intercept and the slope.)

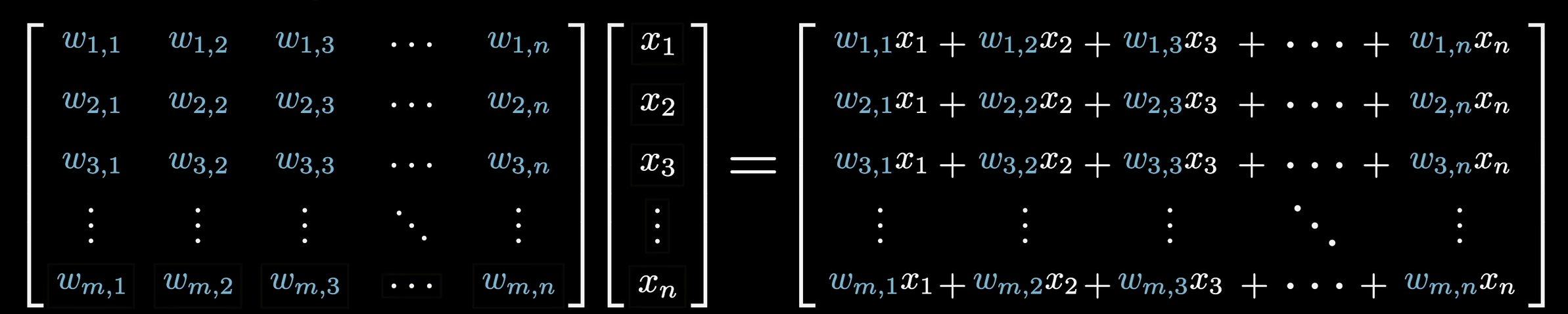
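As a minimal sketch of the "knobs" idea, the snippet below fits the two knobs of Simple Linear Regression (slope and y-intercept) to a handful of examples and then predicts for an input it has never seen. The data points and the helper names `fit_line` and `predict` are made up for illustration, and the closed-form least-squares formula is just one way of turning the knobs.

```python
# Toy training examples (made-up numbers that lie exactly on y = 2x + 1).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# "Training": the examples decide where the two knobs end up.
slope, intercept = fit_line(xs, ys)


def predict(x):
    """Use the fitted knobs on an input the model has never seen."""
    return slope * x + intercept


print(slope, intercept)  # 2.0 1.0
print(predict(10.0))     # 21.0
```

Nothing in the code says "if x is 10 then answer 21"; the answer falls out of the two fitted numbers.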

Weights and Biases:

These are a fundamental part of Machine Learning: the knobs and dials. They’re often dubbed “weights” because (as in Multiple Linear Regression) you take the weighted sum of the inputs; the bias is the constant added on top, like the intercept. They’re the thing being trained. Taking the weighted sum for many examples at once is the same as a simple Matrix Multiplication, and writing it in matrix form makes life easier for training on GPUs.
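To make the weighted-sum and matrix-multiplication link concrete, here is a small sketch in plain Python. The numbers, and the helper names `weighted_sum` and `matvec`, are invented for illustration; in practice a library such as NumPy would do the matrix product in a single call.

```python
# Hypothetical data: 3 examples, each with 2 features.
X = [[1.0, 2.0],
     [3.0, 4.0],
     [5.0, 6.0]]
weights = [0.5, -1.0]  # one weight per feature
bias = 2.0             # constant offset (the intercept)


def weighted_sum(features, weights, bias):
    """Prediction for ONE example: w1*x1 + w2*x2 + ... + bias."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def matvec(matrix, vector):
    """Matrix-vector product: one dot product per row of the matrix."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


# Option 1: handle the examples one at a time.
one_by_one = [weighted_sum(row, weights, bias) for row in X]

# Option 2: treat all examples as one matrix and multiply once.
all_at_once = [z + bias for z in matvec(X, weights)]

print(one_by_one)   # [0.5, -0.5, -1.5]
print(all_at_once)  # [0.5, -0.5, -1.5]
```

Both routes give the same predictions; the matrix form simply packages the work as one big operation, which is the kind of job GPUs are good at.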

Jargon (‘Shargough’ according to lecturer lol)

- Quantities we want to predict are called: dependent, response or target variables. (Often denoted y)

- Quantities we use for prediction are called: independent / explanatory variables, covariates or features. (Often denoted x)

- Parametric Model: A model with some free parameters that we can fit so the model becomes better (e.g. the weights in a Neural Network). Fitting is done by optimising a loss function.
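As a rough sketch of "fitting by optimising a loss function", the snippet below uses plain gradient descent to tune a slope and intercept so that the mean squared error shrinks. The data, the learning rate and the step count are all assumptions picked for this toy case, and gradient descent is only one of many possible optimisers.

```python
# Toy data (made up): points on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def mse_loss(slope, intercept):
    """Loss function: the average squared gap between prediction and target."""
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# The free parameters start at arbitrary values.
slope, intercept = 0.0, 0.0
learning_rate = 0.05  # assumed step size, small enough to be stable here
start_loss = mse_loss(slope, intercept)

n = len(xs)
for step in range(2000):
    errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
    # Partial derivatives of the loss with respect to each parameter.
    grad_slope = 2 / n * sum(e * x for e, x in zip(errors, xs))
    grad_intercept = 2 / n * sum(errors)
    # Nudge each knob a little in the direction that lowers the loss.
    slope -= learning_rate * grad_slope
    intercept -= learning_rate * grad_intercept

final_loss = mse_loss(slope, intercept)
print(round(slope, 3), round(intercept, 3))
print(start_loss, final_loss)
```

The parameters drift towards a slope of 2 and an intercept of 1, which is the "(somehow)" step of training spelled out for a two-knob model.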

Numerical Diagnostics:

The following formulae are used to assess fits of models.

- Mean Squared Error (MSE) (Note: the units get squared as well)

- Root Mean Squared Error (RMSE)

- Coefficient of Determination (R²) (R Squared)

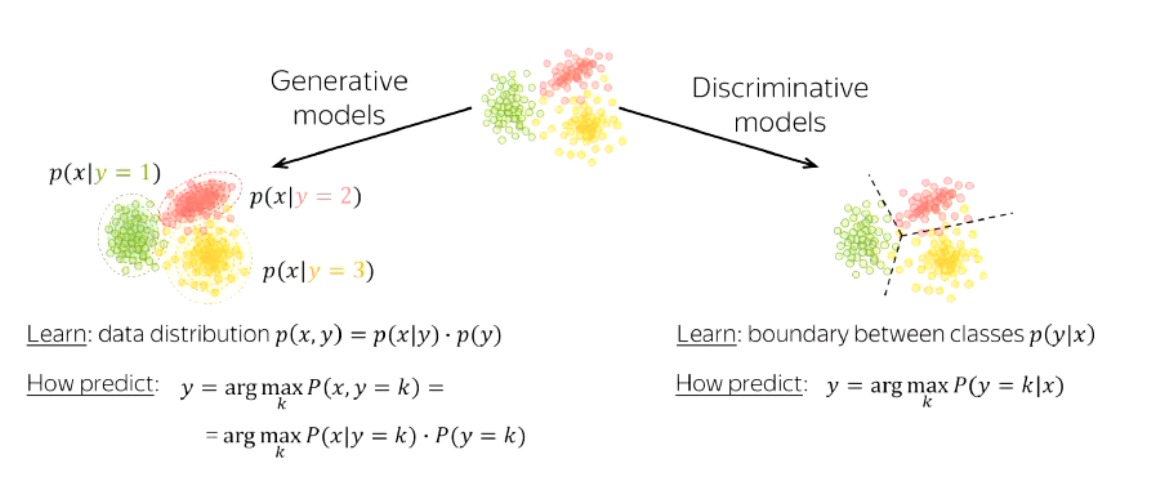
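The three diagnostics listed above can be sketched in a few lines of Python. This assumes the standard textbook definitions: MSE is the mean of the squared residuals, RMSE is its square root, and R² is one minus the ratio of the residual sum of squares to the total sum of squares. The function names and the sample numbers are made up for illustration.

```python
import math


def mse(y_true, y_pred):
    """Mean Squared Error: average of squared residuals (units are squared)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Squared Error: back in the same units as y."""
    return math.sqrt(mse(y_true, y_pred))


def r_squared(y_true, y_pred):
    """Coefficient of Determination: 1 - SS_residual / SS_total."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Made-up targets and predictions; only the last prediction is off (by 2).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 6.0]

print(mse(y_true, y_pred))                   # 1.0
print(rmse(y_true, y_pred))                  # 1.0
print(round(r_squared(y_true, y_pred), 3))   # 0.2
```

An R² of 1 would mean a perfect fit, while 0 means the model does no better than always predicting the mean of y.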

Generative Vs Discriminative:

- Generative: Learns how the data is generated for each label (i.e. it learns the joint Probability distribution of data and labels, and classifies using Bayes' rule)

- Discriminative: Learns the decision boundary between the classes directly. (I.e. if you were to plot every single point on a plane, there would be a “boundary” across which points fall under a different class.)

- Example: Logistic Regression (a discriminative model)
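A small side-by-side sketch of the two approaches on a made-up 1-D dataset. The generative half assumes each class is Gaussian (that choice is mine, not something from the lecture) and classifies with Bayes' rule; the discriminative half is a bare-bones Logistic Regression trained by gradient descent. All names, data and hyperparameters are hypothetical.

```python
import math

# Hypothetical 1-D dataset: class 0 clusters near 1, class 1 clusters near 5.
xs = [0.5, 1.0, 1.5, 2.0, 4.0, 4.5, 5.0, 5.5]
labels = [0, 0, 0, 0, 1, 1, 1, 1]


# --- Generative: model how each class produces its data, P(x | y) and P(y) ---
def fit_gaussian_classes(xs, labels):
    params = {}
    for c in set(labels):
        points = [x for x, y in zip(xs, labels) if y == c]
        mean = sum(points) / len(points)
        var = sum((p - mean) ** 2 for p in points) / len(points)
        params[c] = (len(points) / len(xs), mean, var)  # (prior, mean, variance)
    return params


def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def generative_predict(x, params):
    # Bayes' rule: pick the class with the largest P(y) * P(x | y).
    return max(params, key=lambda c: params[c][0] * gaussian_pdf(x, params[c][1], params[c][2]))


# --- Discriminative: Logistic Regression models P(y | x) directly ---
def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def fit_logistic(xs, labels, learning_rate=0.1, steps=5000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, labels)]
        # Gradient of the average log-loss with respect to w and b.
        w -= learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * sum(errors) / n
    return w, b


def discriminative_predict(x, w, b):
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


params = fit_gaussian_classes(xs, labels)
w, b = fit_logistic(xs, labels)

for x in (1.0, 5.0):
    print(x, generative_predict(x, params), discriminative_predict(x, w, b))

# The learned decision boundary is where w * x + b = 0.
print("boundary at x =", -b / w)
```

The generative model ends up with a description of each class (prior, mean, variance), whereas the discriminative model only keeps `w` and `b`, which is just enough to place the boundary.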